Scheduling - Daily updates

I assumed that you already knew how to create sessions and events tables,s and now you want to schedule daily updates.

The easiest way to do it is to use Dataform's workflow configurations.

Dataform runs actions in a two steps: it compiles your Dataform project (release configuration) and then executes release configuration (workflow configuration). So you need to set up both of these steps.

Create release configuration#

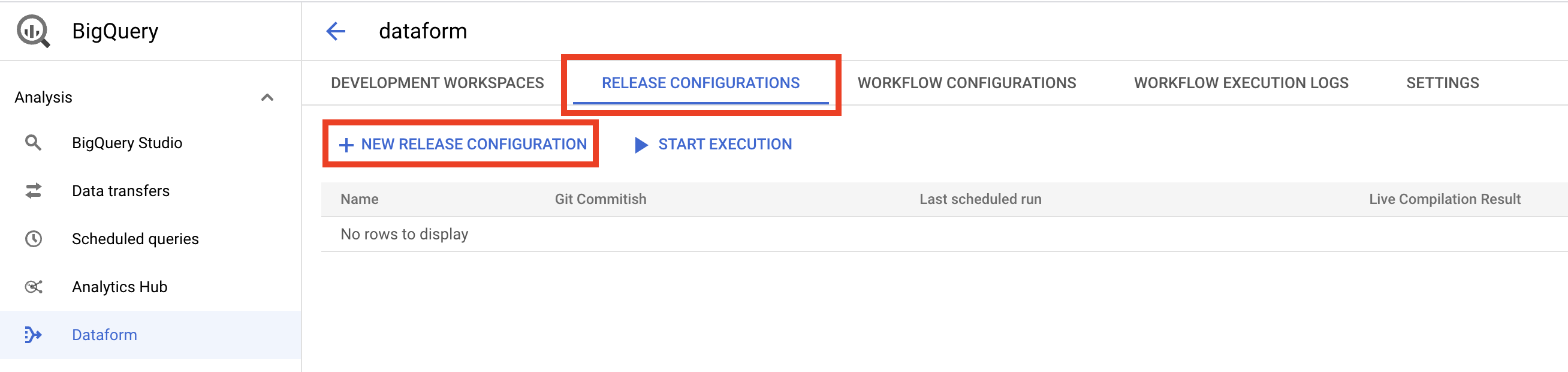

In your Dataform repository select Release configuration tab and click "New release configuration" button.

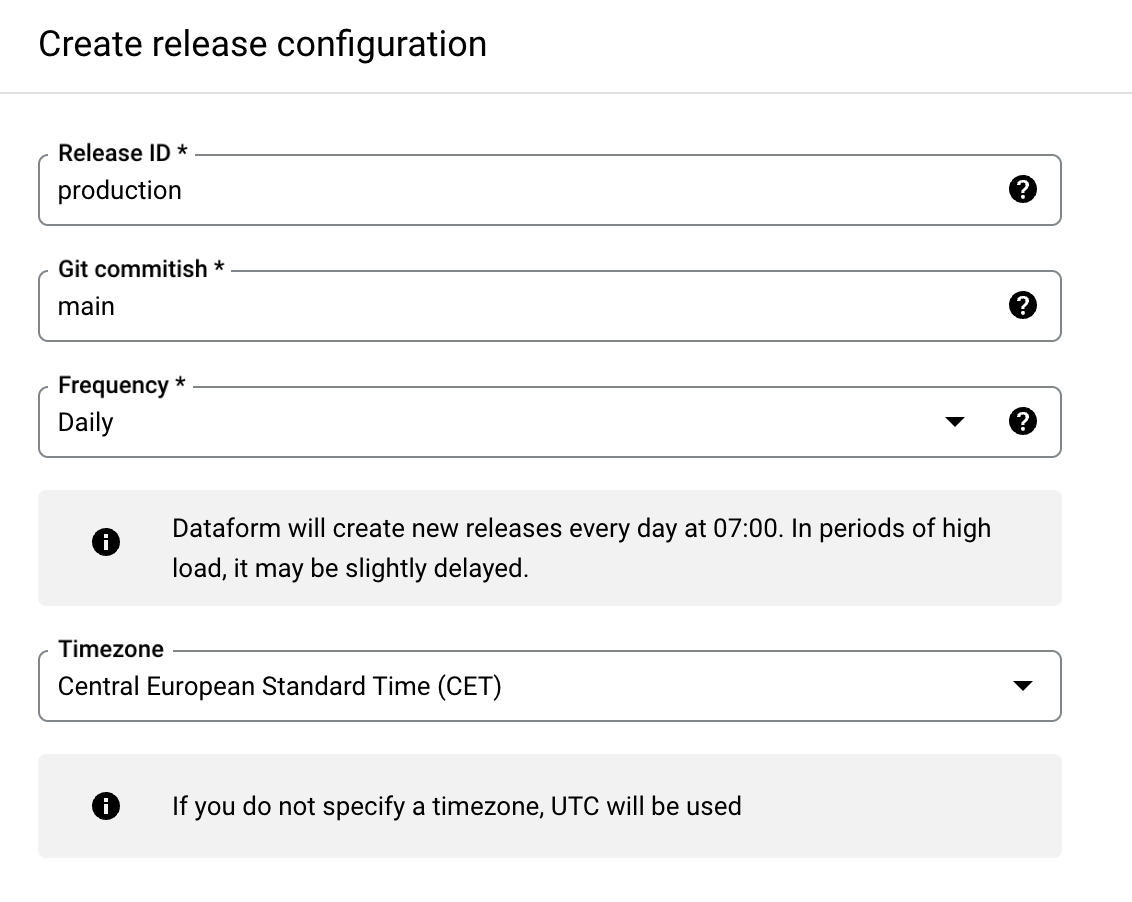

And fill out the form:

- Set Release ID (name) for something like

production - Git commitish (GitHub branch name) to

main - Frequency to

Dailyby default. It means how often Dataform should recompile your project. If you don't change your code often you could selectNeverand recompile configuration manually after your changes. - Timezone - you could change the timezone if you need to. By default it's UTC.

For this example we don't need to change Compilation overrides and Compilation variables.

Click "Create" button to create release configuration.

warning

You should commit and push your code to the target branch before scheduling. Otherwise, Dataform can't compile it

Create workflow configuration#

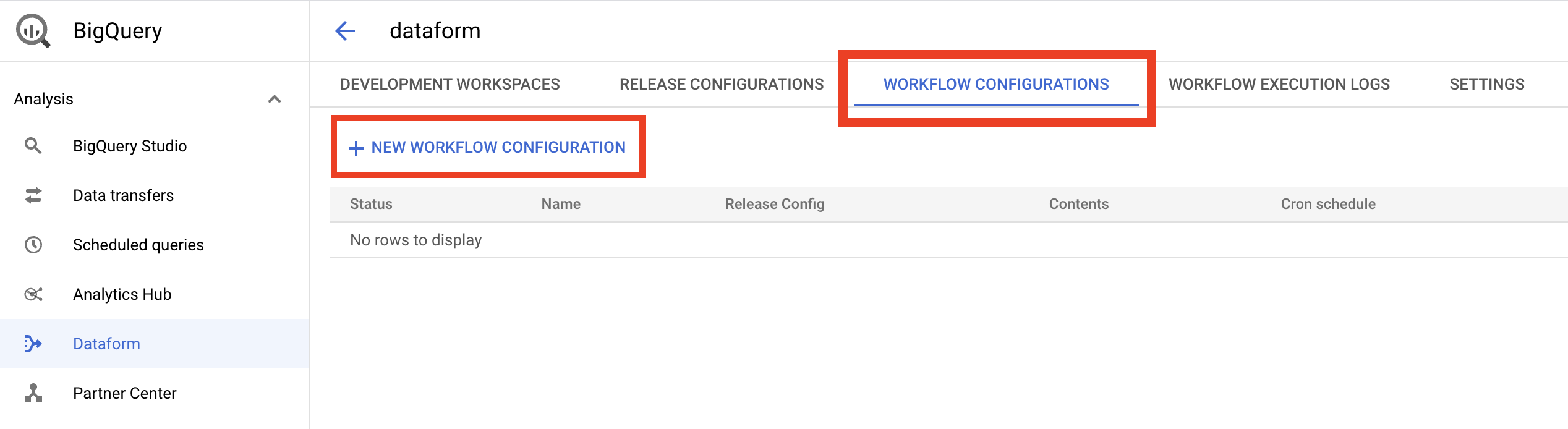

In your Dataform repository page select Workflow configuration tab and click "New workflow configuration" button.

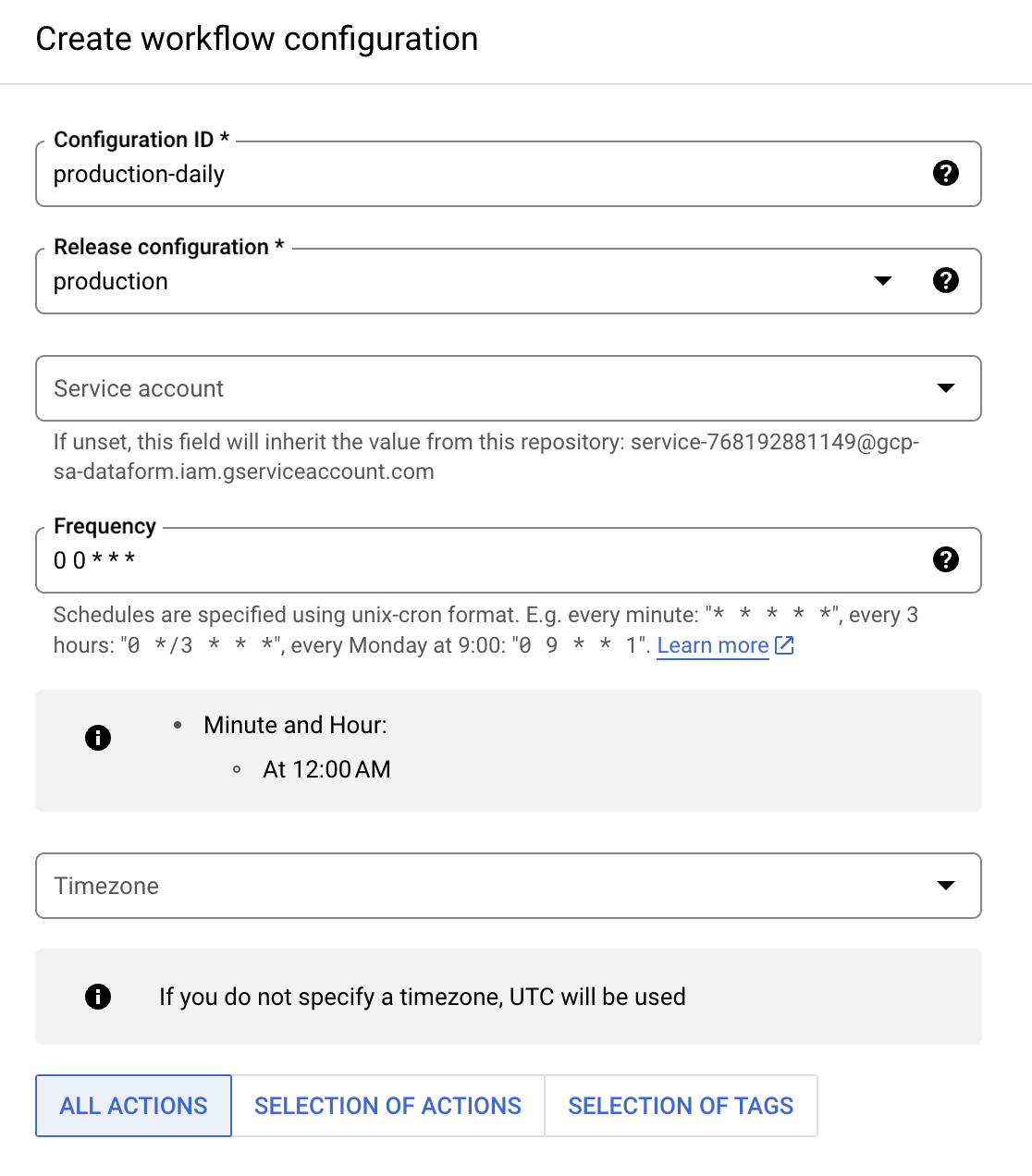

And fill out the form:

- Set Configuration ID (name) for something like

production-daily - Release configuration - Select release configuration we just created

production - Service account - you could select default service account or keep it empty.

- Frequency - set it to daily

0 0 * * *, you could provide any frequency in crontab format. Or use something like crontab.guru to generate it. - Timezone - you could change the timezone if you need to. By default it's UTC.

- Select actions you want to run. By defualt it's all actions in your Dataform project. But you could select only needed actions or tags.

Create "Create" button to create a workflow configuration.

That's all, Dataform will run your actions daily.

Change sessions and events tables#

But for daily updates you need to query only the previous date table, but sometimes GA4 delays data export for two days, so it's safe to query the last two days. You could do it like this:

const ga4 = require("dataform-ga4-sessions");

const config = { dataset: "analytics_XXXXXX", incrementalTableName: "events_*", incrementalTableEventStepWhere: "_TABLE_SUFFIX between format_date('%Y%m%d',date_sub(current_date(), interval 3 day)) and format_date('%Y%m%d',date_sub(current_date(), interval 1 day)) and contains_substr(_TABLE_SUFFIX, 'intraday') is false", nonIncrementalTableName: "events_*", nonIncrementalTableEventStepWhere: "contains_substr(_TABLE_SUFFIX, 'intraday') is false",};

const sessions = new ga4.Session(config);sessions.publish();Line by line:

- incrementalTableName: "events_*" - here we set incremental table name to

events_*so for incremental context Dataform will query all daily tables. So we need to filter them by providing were statement. - incrementalTableEventStepWhere - here we provide where statement for incremental context. We control which tables to query using

_TABLE_SUFFIX. So here set that we need to query only tables for the previous two days (excluding today date) and format dates in GA4 tables format like20231220('%Y%m%d'). and the second condition is to exclude intraday tables. It's a good practice to exclude intraday tables because they could be incomplete and save them in different tables. You could read more about intraday tables in intraday sessions tutorial. - nonIncrementalTableName: "events_*" - here we set non incremental table name to

events_*. It's mandatory, as by default the package uses the same table name. It means that for the first run we will filter all daily tables. - nonIncrementalTableEventStepyWhere - here we filter intraday tables for the first run. Again it's a good practice to exclude intraday tables because they could be incomplete.

Deside at what time to run actions#

At the moment Google doesn't guarantee that GA4 exports will be ready at the same time every day. Even for GA4 360 clients. So if you are using this scheduling method you need to decide at what time to run actions.

You could check it manually using the following query, but don't forget to change <GCP-PROJECT-ID> to your GCP project id and analytics_XXXXXX to your actual dataset name:

```javascript

```sqlSELECT table_name, creation_time, EXTRACT(hour from creation_time) as creation_hour, DATETIME_DIFF(datetime(creation_time), PARSE_DATETIME('%Y%m%d', substr(table_name, 8)),hour) as creation_delay,FROM `<GCP-PROJECT-ID>`.analytics_XXXXXX.INFORMATION_SCHEMA.TABLES as pWHERE regexp_contains(table_name, r'events_\d+')ORDER BY table_name DESCOr even get the maximum and average delay:

SELECT max(DATETIME_DIFF(datetime(creation_time), PARSE_DATETIME('%Y%m%d', substr(table_name, 8)),hour)) as max_hours_delay, round(avg(DATETIME_DIFF(datetime(creation_time), PARSE_DATETIME('%Y%m%d', substr(table_name, 8)),hour))) as avg_hours_delay,FROM `<GCP-PROJECT-ID>`.analytics_XXXXXX.INFORMATION_SCHEMA.TABLES as pWHERE regexp_contains(table_name, r'events_\d+')Usually it's something between 30 and 46 hours.

As an option you could create two workflow configurations per day. It should be safe enough, as action generated by the package creates MERGE statement and you wouldn't have doubles for the same sessions.

Or you could use a more advanced scheduling method - scheduling - intraday.